Sequential data matters in machine learning because of its many uses and the challenges it poses. Sequence models and Recurrent Neural Networks (RNNs) are the foundation of widely used technologies such as language translation and voice recognition.

Sequence models are designed to operate on data that arrives as a sequence, such as time series, text, speech, and video. A simple example task is word prediction, where the model tries to guess the next word in a given sentence. Unlike models that treat each data point independently, sequence models take the order and structure of the data into account.
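The word prediction task can be made concrete with a very small sketch. The code below is not an RNN; it is a count-based bigram model, chosen here only because it shows in a few lines that the prediction depends on word order. The corpus and the function names `train_bigrams` and `predict_next` are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigrams(sentences):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        # zip pairs each word with its successor, so order is what is learned
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Toy corpus (hypothetical data, for illustration only)
corpus = [
    "the cat sat on the mat",
    "the cat chased the mouse",
    "a dog sat on the rug",
]
model = train_bigrams(corpus)
next_word = predict_next(model, "sat")
```

A bigram model only looks one word back. The RNNs discussed in the rest of this article aim to carry context across many steps instead.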

Speech Recognition: Transcribing spoken language from audio signals into text.

Sentiment Classification: Reading a sequence of words and determining the sentiment that a piece of text expresses.

Video Activity Recognition: Recognizing activities in video clips by analyzing sequences of frames.

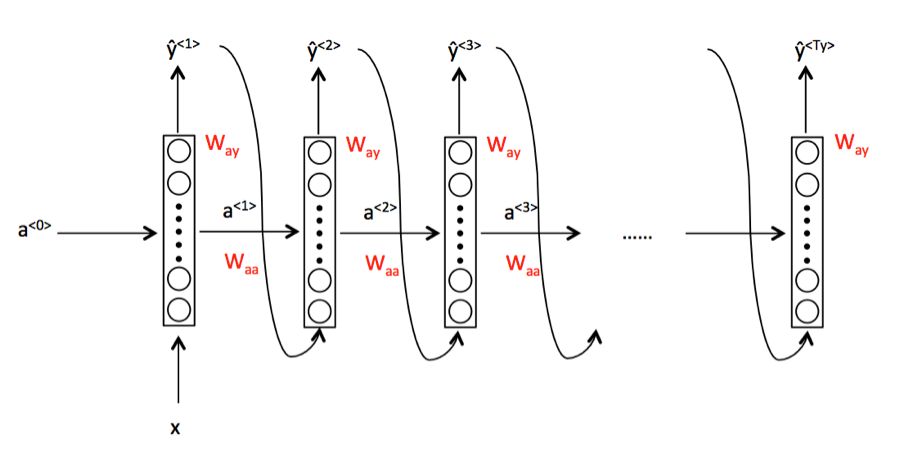

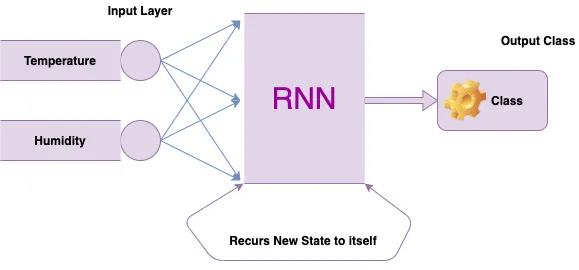

An RNN is a neural network architecture that is particularly suited to processing sequential data. It maintains a state that stores information from previous inputs, which makes it useful for tasks where context matters.

An RNN handles sequential data through a recurrent connection: the hidden state computed at one step is fed back in at the next step. Carrying context from one step to the next is the core problem that the architecture is designed to address.

Internal Memory: RNNs retain information from past inputs so that they can use it when processing the current input.

Sequential Data Processing: Best suited to sequences where the order of the data is important, for example text or speech analysis.

Dynamic Processing: Updates the information held in memory each time a new input arrives.
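The three properties above can be sketched with a minimal vanilla RNN forward pass in NumPy. This is an illustrative sketch, not a reference implementation: the weight names `W_xh`, `W_hh`, `b_h`, the sizes, and the random initialization are all assumptions, and no training is performed.

```python
import numpy as np

def rnn_forward(inputs, W_xh, W_hh, b_h):
    """Run a vanilla RNN over a sequence and return every hidden state."""
    h = np.zeros(W_hh.shape[0])      # internal memory, empty at the start
    states = []
    for x_t in inputs:               # inputs are consumed strictly in order
        # the memory is updated from the new input and the previous memory
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        states.append(h)
    return np.stack(states)

# Arbitrary sizes and random weights (assumptions for the sketch)
rng = np.random.default_rng(0)
input_size, hidden_size, seq_len = 3, 4, 5
W_xh = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_hh = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b_h = np.zeros(hidden_size)
sequence = rng.normal(size=(seq_len, input_size))

states = rnn_forward(sequence, W_xh, W_hh, b_h)
# Feeding the same vectors in reverse order gives a different final state
reversed_states = rnn_forward(sequence[::-1], W_xh, W_hh, b_h)
```

Comparing `states[-1]` with `reversed_states[-1]` shows that the final state depends on the order of the inputs, not just on which inputs were seen.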

One to Many: Takes a single input and generates a sequence of outputs. Example: Image captioning.

Many to One: Takes a sequence of inputs and produces a single output, typically read after the last step. Example: Sentiment classification.

Many to Many: Takes a sequence of inputs and produces a sequence of outputs. Example: Machine translation.

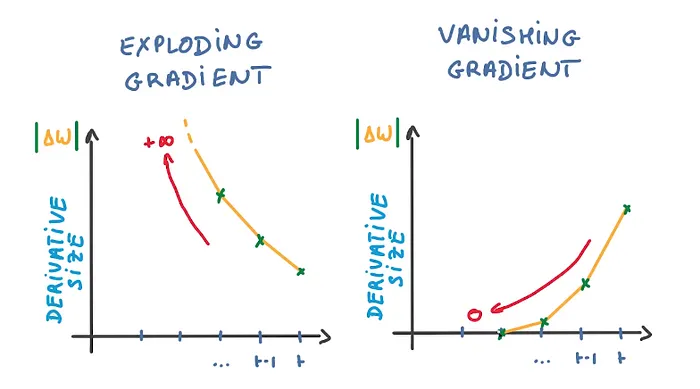
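One way to see the difference between these input/output patterns is that they can share the same recurrent step and differ only in where inputs are fed and where outputs are read. The sketch below assumes a single shared `step` function with random, untrained weights; all names and sizes are hypothetical. The many-to-many variant shown is the aligned form with one output per input, whereas machine translation systems typically use an encoder-decoder arrangement in which input and output lengths can differ.

```python
import numpy as np

rng = np.random.default_rng(1)
D, H, K = 3, 4, 2                    # input, hidden, output sizes (arbitrary)
W_xh = rng.normal(scale=0.5, size=(H, D))
W_hh = rng.normal(scale=0.5, size=(H, H))
W_hy = rng.normal(scale=0.5, size=(K, H))

def step(x_t, h):
    """One recurrent update shared by all three patterns."""
    return np.tanh(W_xh @ x_t + W_hh @ h)

def many_to_one(xs):
    h = np.zeros(H)
    for x_t in xs:
        h = step(x_t, h)
    return W_hy @ h                  # single output, read after the last step

def many_to_many(xs):
    h = np.zeros(H)
    ys = []
    for x_t in xs:
        h = step(x_t, h)
        ys.append(W_hy @ h)          # one output for every input step
    return np.stack(ys)

def one_to_many(x, n_steps):
    h = step(x, np.zeros(H))         # the single input sets the first state
    ys = []
    for _ in range(n_steps):
        ys.append(W_hy @ h)
        h = step(np.zeros(D), h)     # later steps receive a zero input
    return np.stack(ys)

xs = rng.normal(size=(6, D))
single = many_to_one(xs)
per_step = many_to_many(xs)
generated = one_to_many(xs[0], n_steps=4)
```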

Traditional RNNs suffer from the vanishing gradient problem, which makes it hard for them to capture long-range dependencies. LSTMs (Long Short-Term Memory networks) address this by adding a memory cell that lets them retain information over extended durations.
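The vanishing gradient effect can be observed numerically. In a tanh RNN, the gradient of the state at step t with respect to the initial state is a product of per-step Jacobians, and with small recurrent weights that product shrinks rapidly. The sketch below tracks its norm; the sizes, the weight scale of 0.1, and the random inputs are assumptions chosen to make the effect visible.

```python
import numpy as np

rng = np.random.default_rng(0)
D, H, T = 3, 8, 50                   # input size, hidden size, sequence length
W_xh = rng.normal(scale=0.5, size=(H, D))
W_hh = rng.normal(scale=0.1, size=(H, H))   # small recurrent weights (assumed)

h = np.zeros(H)
grad = np.eye(H)                     # d h_t / d h_0, starts as the identity
norms = []
for t in range(T):
    x_t = rng.normal(size=D)
    h = np.tanh(W_xh @ x_t + W_hh @ h)
    # Jacobian of the new state w.r.t. the previous one: diag(1 - h^2) @ W_hh
    jac = (1.0 - h ** 2)[:, None] * W_hh
    grad = jac @ grad                # chain rule across time steps
    norms.append(np.linalg.norm(grad))
```

Inspecting `norms` shows the influence of the initial state on later states collapsing toward zero, which is why a learning signal from late steps barely reaches early ones.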

An LSTM's gates (input, forget, and output) control what is written to, kept in, and read from the memory cell. These gates help LSTMs capture long-term dependencies better than regular RNNs.
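A single LSTM step can be sketched as follows, using the standard formulation with input, forget, and output gates. The stacked weight matrix `W`, its gate ordering, and the function name `lstm_step` are conventions picked for this sketch; libraries lay out their parameters differently.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H) and b has shape (4*H,)."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x_t, h_prev]) + b
    i = sigmoid(z[0:H])              # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])          # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])      # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])      # candidate values to write
    c = f * c_prev + i * g           # additive update of the memory cell
    h = o * np.tanh(c)               # hidden state passed to the next step
    return h, c

# Run over a short random sequence (sizes and weights are arbitrary)
rng = np.random.default_rng(0)
D, H = 3, 4
W = rng.normal(scale=0.5, size=(4 * H, D + H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(5, D)):
    h, c = lstm_step(x_t, h, c, W, b)
```

Because the cell update is additive, a forget gate close to 1 and an input gate close to 0 carry the cell contents forward nearly unchanged, which is how information can persist across many steps.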

Sequence models and RNNs, and LSTMs in particular, are central to machine learning on sequential data. Because they can keep and use context over extended periods, these networks are valuable for tasks that involve temporal relationships. From speech recognition to language translation, RNNs and LSTMs continue to shape how we interact with technology.